Robokeeper - A Robotic Goalkeeper

Team: Jonny Bosnich, Joshua Cho, Lio Liang, Marco Morales, Cody Nichoson

Introduction

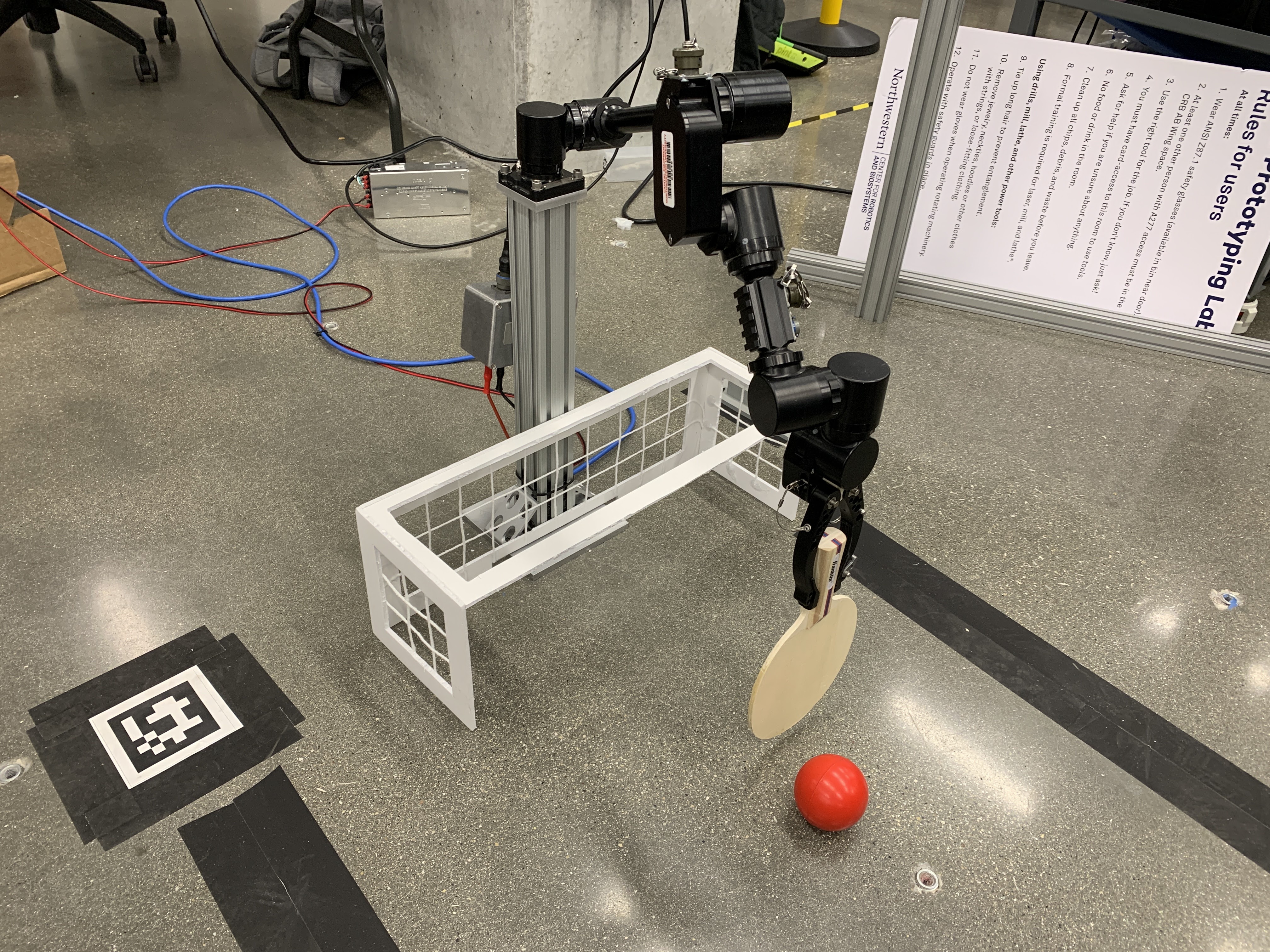

Robokeeper is a robotic arm that uses computer vision to identify a moving ball and moves its end-effector to deflect the ball before it crosses the goal line. This project uses ROS and OpenCV to handle frame transformations, motion planning, perception, and trajectory estimation.

Hardware

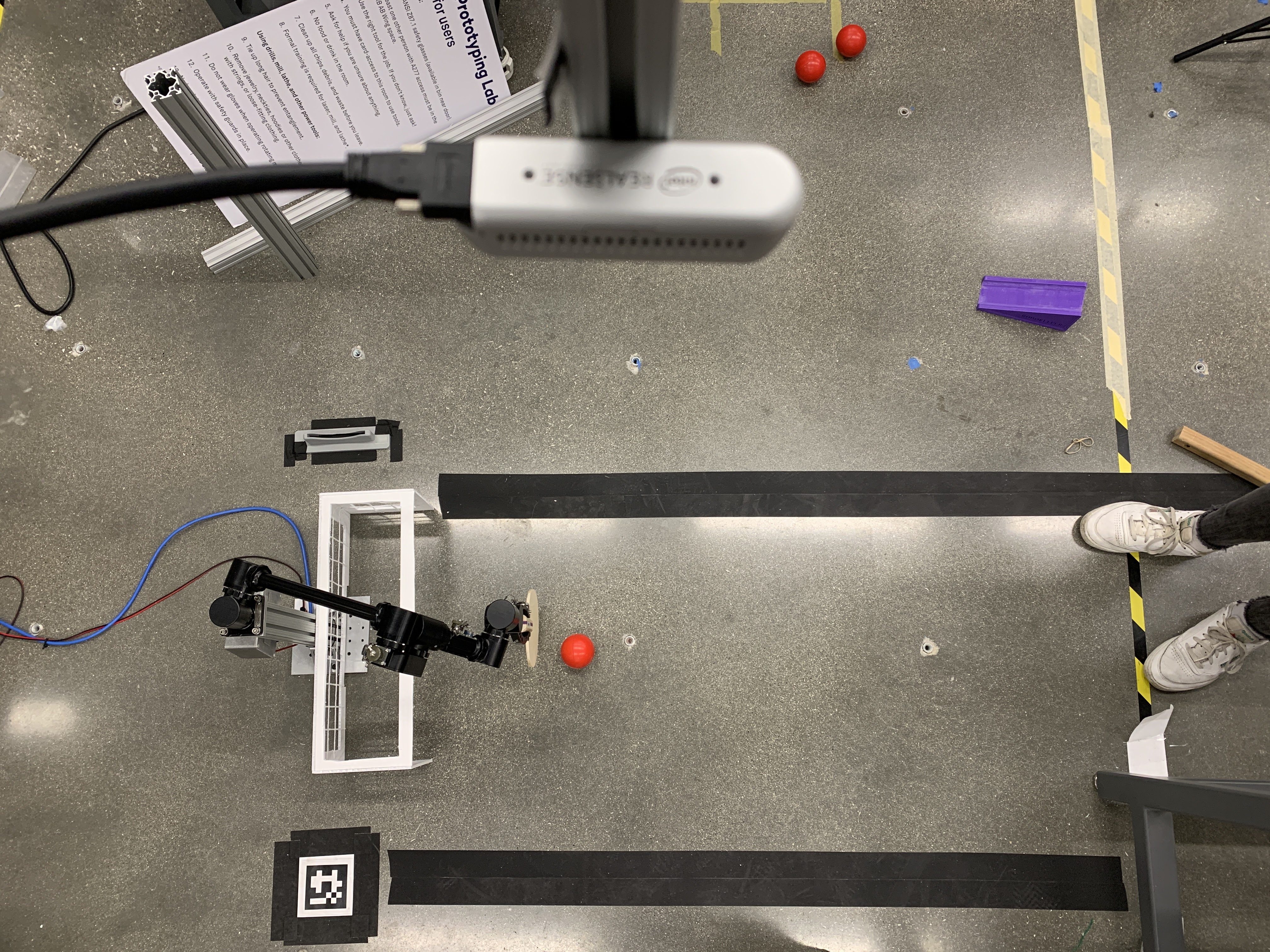

The Robokeeper ROS package controls the HDT Adroit 6-DOF Manipulator, a lightweight yet very strong robot arm with no joint limits. The system also uses an Intel RealSense D435 depth camera, suspended from the ceiling and pointing directly down at the playing field.

Software

The package contains three nodes that perform three distinct tasks simultaneously to accomplish the overall goal. The perception node processes the images captured by the camera and determines the location of the ball. The transformations node converts the ball's location from the camera frame into the robot's frame. Finally, the motion control node moves the robot arm to the desired location based on the ball's position and velocity.

Perception

The perception node processes the data from the Intel RealSense camera to identify and locate the object that the robot is tasked with blocking. It uses a CV bridge to integrate OpenCV with ROS, subscribes to the RealSense camera data, and publishes the 3-dimensional coordinates of the centroid of the object of interest (a red ball for our purposes).

To identify the ball, each video frame is thresholded for a range of HSV values that closely match those of our ball. Once the area of interest is located, a contour is drawn around its edges and the centroid of the contour is computed. This centroid is then treated as the location of the ball in the camera frame and published.
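The thresholding and centroid steps can be illustrated with a small, dependency-free sketch. It is not the node's actual code: the node uses OpenCV, whereas this version uses the standard-library `colorsys` module and takes the centroid of all in-range pixels (via image moments) instead of tracing a contour first. The function name `ball_centroid`, its parameters, and the threshold values in the usage note are assumptions made for illustration.

```python
import colorsys


def ball_centroid(rgb_image, h_range, s_min, v_min):
    """Return the (col, row) centroid of in-range pixels, or None if none match.

    rgb_image: list of rows, each row a list of (r, g, b) tuples in 0..255.
    h_range:   (low, high) hue bounds in [0, 1]. If low > high the range
               wraps around 0, which is convenient for red.
    s_min, v_min: minimum saturation and value, both in [0, 1].
    """
    h_low, h_high = h_range
    m00 = m10 = m01 = 0  # zeroth and first-order moments of the mask
    for row_idx, row in enumerate(rgb_image):
        for col_idx, (r, g, b) in enumerate(row):
            h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
            if h_low <= h_high:
                hue_ok = h_low <= h <= h_high
            else:
                hue_ok = h >= h_low or h <= h_high  # wrap-around hue range
            if hue_ok and s >= s_min and v >= v_min:
                m00 += 1
                m10 += col_idx
                m01 += row_idx
    if m00 == 0:
        return None  # nothing in the frame matched the threshold
    return (m10 / m00, m01 / m00)
```

Calling `ball_centroid(frame, (0.95, 0.05), 0.5, 0.5)` on a frame containing a saturated red blob returns the blob's pixel centroid. Returning `None` when nothing matches lets a caller skip publishing for frames in which the ball is not visible.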

Transforms

Knowing where the ball is relative to the camera is great, but it doesn't tell the robot where the ball is in its own frame. To bridge this gap, a transformation between the camera frame and the robot frame is necessary. This node subscribes to both the ball coordinates from the perception node and the AprilTag detections, and publishes the transformed ball coordinates in the robot frame.

To complete the relationship between the two frames, an AprilTag was positioned on the floor next to the robot, with a known transformation between the tag and the robot's base link. Using the RealSense, the transformation between the camera frame and the AprilTag can also be determined. With these three frames and their relationships, coordinates in the camera frame can finally be transformed into coordinates in the robot frame.
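The chain of frames can be written out as a short sketch using 4x4 homogeneous transforms. The convention assumed here is that `t_a_b` maps points expressed in frame `b` into frame `a`, so the camera-to-base transform is `t_base_tag` composed with the inverse of `t_cam_tag`. All function and variable names are hypothetical, and in a ROS node this bookkeeping would more likely be delegated to a transform library than written by hand.

```python
def mat_mul(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def invert_rigid(t):
    """Invert a rigid transform [R p; 0 1] as [R^T  -R^T p; 0 1]."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    new_p = [-sum(r_t[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [r_t[i] + [new_p[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def transform_point(t, point):
    """Apply a 4x4 transform to an (x, y, z) point."""
    v = [point[0], point[1], point[2], 1.0]
    return tuple(sum(t[i][k] * v[k] for k in range(4)) for i in range(3))


def ball_in_base(t_base_tag, t_cam_tag, ball_cam):
    """Express a ball position measured in the camera frame in the base frame.

    t_base_tag: known pose of the AprilTag in the robot base frame.
    t_cam_tag:  detected pose of the AprilTag in the camera frame.
    """
    t_base_cam = mat_mul(t_base_tag, invert_rigid(t_cam_tag))
    return transform_point(t_base_cam, ball_cam)
```

As a sanity check, passing the tag's own position in the camera frame as `ball_cam` should return the tag's known position in the base frame.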

Motion Control

This node provides the core functionality of the Robokeeper. It subscribes to the topic containing the ball coordinates in the robot frame and offers a number of services for interacting with its environment.

The main service used is /start_keeping. As the name suggests, this service allows the robot to begin interpreting the ball coordinates and attempting to intercept the ball at the goal line. The robot predicts where the ball will cross the goal line using a control loop that compares the ball's positions in two consecutive camera frames; the loop uses PD gains that were tuned experimentally. Joint trajectory commands are sent to the robot through a mix of MoveIt! and direct joint publishing (depending on the service called) to accomplish the task. This node also keeps track of goals scored by determining whether the ball has entered the net.
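One way to picture the prediction step is a straight-line extrapolation from two consecutive ball positions, followed by a PD correction toward the predicted point. This is a sketch under stated assumptions, not the node's actual controller: it assumes the goal line is a line of constant x in the robot frame and that the ball rolls in a straight line, and `pd_command` is the generic textbook PD form. The function names and all gain values are hypothetical.

```python
def predict_goal_crossing(prev_xy, curr_xy, goal_line_x):
    """Extrapolate two consecutive ball positions to the line x = goal_line_x.

    Returns the predicted y coordinate at the goal line, or None if the
    ball is not moving toward the goal line.
    """
    dx = curr_xy[0] - prev_xy[0]
    dy = curr_xy[1] - prev_xy[1]
    remaining = goal_line_x - curr_xy[0]
    if dx == 0 or remaining / dx < 0:
        return None  # stationary along x, or moving away from the goal
    # remaining / dx is the number of frame intervals left before crossing
    return curr_xy[1] + dy * (remaining / dx)


def pd_command(error, prev_error, dt, kp, kd):
    """Generic PD term: proportional to the error plus its rate of change."""
    return kp * error + kd * (error - prev_error) / dt
```

For example, a ball seen at (2.0, 0.0) and then at (1.5, 0.1) is extrapolated to cross the line x = 0 at roughly y = 0.4. The difference between that target and the end-effector's current position along the goal line would be the `error` fed to `pd_command`.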