UAV Autonomous Precision Landing

Introduction

This work is part of my Mechanical Engineering Capstone project, in which my team designed, built, and tested an autonomously landing drone-robot power exchange system under Professor Sridhar Krishnaswamy of Northwestern University’s Department of Mechanical Engineering. The drone is localized using visual inertial odometry (VIO) rather than the GPS sensors used on most hobbyist drones. This camera-based approach provides accurate and robust localization and navigation without GPS. The drone uses AprilTag fiducial markers to detect the landing target and perform precision landing.

Hardware

The list of hardware used for this project is shown below:

- Holybro X500 V2 frame

- Pix32 v5 flight controller and carrier board

- Raspberry Pi 4B 8GB RAM

- 32GB MicroSD card

- PiSugar 2 Plus

- Intel RealSense T265 Tracking Camera

- mRo SiK Telemetry Radio V2 (915 MHz)

- FrSky Taranis Receiver X8R

- 4S 50C 6000mAh Li-Po battery

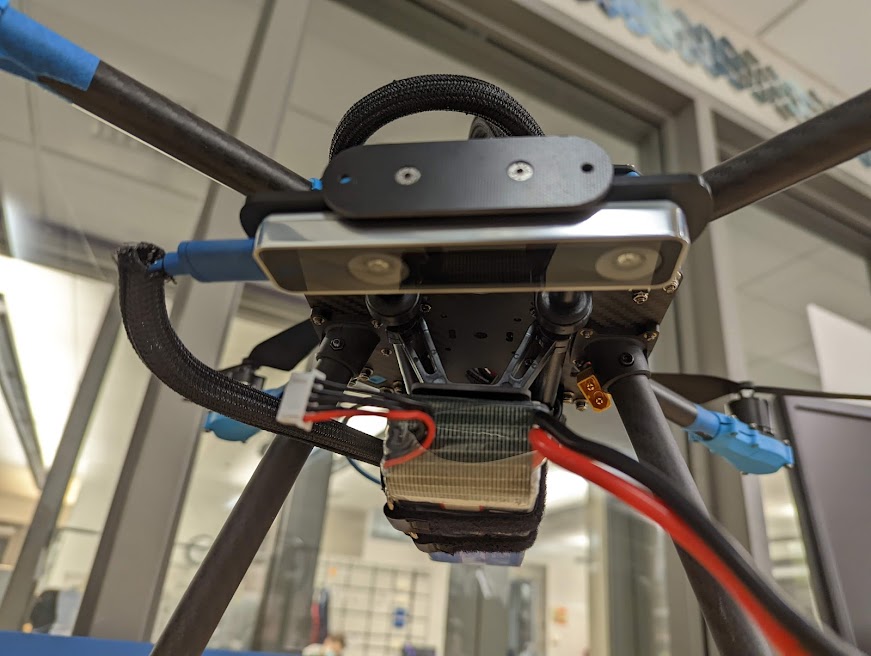

The main sensor used for drone position tracking is the Intel RealSense T265 Tracking Camera, a localization and mapping device popular in the robotics research community. It is lightweight, has a small profile, and runs V-SLAM on a dedicated onboard processor, which offloads pose estimation from the companion computer and makes it well suited to a drone with a small-scale companion computer. The RealSense tracking camera is mounted at the front of the drone, facing downwards.

The Raspberry Pi 4B was chosen as the onboard companion computer because it was the economical option with the USB 3.0 ports needed to receive images from the RealSense camera. The Pi requires a regulated 5 V supply at up to 3.0 A, which the drone's built-in voltage regulator cannot provide. Therefore, the team powered the Raspberry Pi with a dedicated battery pack with an integrated safety circuit, the PiSugar 2 Plus.

The onboard flight controller is the Holybro Pix32 v5, a variant of the popular Pixhawk 4 flight controller, which is also made by Holybro.

Software Installations

This section outlines the software required for VIO navigation.

Raspberry Pi 4B (16GB+ microSD card):

- Ubuntu Server 20.04.4 LTS

- ROS Noetic - final ROS 1 distribution of the Robot Operating System

- librealsense - RealSense libraries and required dependencies

- realsense-ros - ROS wrapper for RealSense devices

- mavros - ROS tool for receiving and sending MAVLink messages

- vision_to_mavros - ROS nodes and launchfiles for converting vision data from camera to MAVLink compatible messages

- apriltag_ros - ROS wrapper for the AprilTag 3 visual fiducial detector

- Latest stable version of ArduPilot Copter firmware (flashed onto the flight controller)

Ground station computer:

- ROS Noetic

- QGroundControl - flight control and mission planning software for MAVLink-enabled drones

System Overview

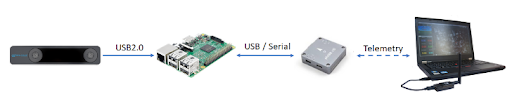

The image below shows a high-level overview of how the drone's hardware components communicate with each other.

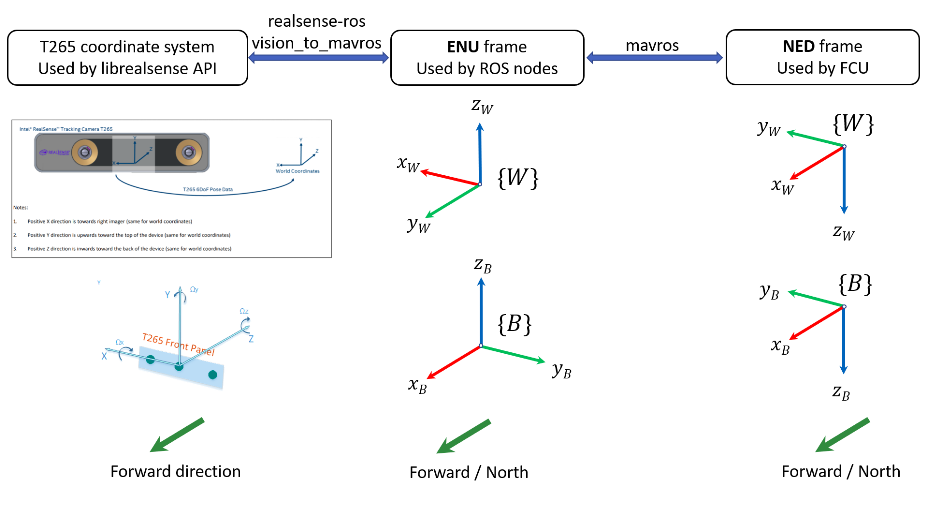

Data on the drone’s position is collected from the camera as a raw image stream. The camera processes this data onboard into position and orientation coordinates relative to the camera’s frame. This pose data, published as ROS /tf transforms, is sent to the Raspberry Pi, which uses a custom-written ROS package to convert it into MAVLink messages that the flight controller can read. During this process, the position data is transformed into the ROS world-frame convention (ENU, where x points East, y points North, and z points up); MAVROS then converts it into the NED (North-East-Down) frame used by the flight controller and the ground control software.
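The ENU-to-NED step is a fixed axis swap. MAVROS performs this conversion itself, so the sketch below only illustrates the math, assuming positions in metres and yaw in radians. The `Vec3` type and the function names are hypothetical and are not taken from vision_to_mavros or MAVROS.

```python
import math
from dataclasses import dataclass

# Hypothetical helpers for illustration only; MAVROS does this conversion internally.


@dataclass(frozen=True)
class Vec3:
    """Simple 3D vector, units in metres."""
    x: float
    y: float
    z: float


def enu_to_ned(p: Vec3) -> Vec3:
    """Convert ENU (x=East, y=North, z=Up) to NED (x=North, y=East, z=Down)."""
    # Swap the two horizontal axes and flip the vertical axis.
    return Vec3(x=p.y, y=p.x, z=-p.z)


def enu_yaw_to_ned(yaw_enu: float) -> float:
    """Convert yaw measured counter-clockwise from East (ENU) into
    yaw measured clockwise from North (NED), wrapped to [-pi, pi]."""
    yaw_ned = math.pi / 2 - yaw_enu
    return math.atan2(math.sin(yaw_ned), math.cos(yaw_ned))


if __name__ == "__main__":
    # A point 1 m East, 2 m North and 0.5 m above the origin.
    print(enu_to_ned(Vec3(1.0, 2.0, 0.5)))
```

Running it prints `Vec3(x=2.0, y=1.0, z=-0.5)`. Because the swap is its own inverse for positions, the same function also converts NED back to ENU.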

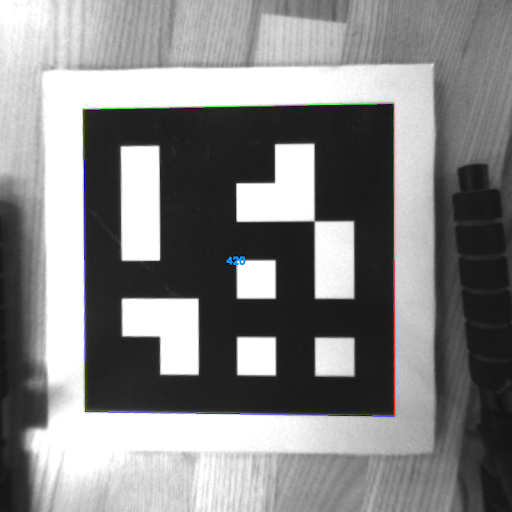

The processed data is sent to the flight controller, which uses it to maintain stable flight. Telemetry is also relayed back to the ground station over MAVLink through the wireless telemetry radio. In parallel, the raw images from the camera’s fisheye lenses are rectified on the Raspberry Pi using computer vision algorithms. An AprilTag detection algorithm then searches the rectified image for the tag's unique black-and-white pattern to determine whether the AprilTag is in the frame.
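Rectification undoes the fisheye lens distortion before tag detection. As a hedged sketch, assuming the fisheye intrinsics follow the four-coefficient Kannala-Brandt (equidistant) model that OpenCV's `cv2.fisheye` module also uses, a single raw pixel can be undistorted by inverting the polynomial θ_d = θ(1 + k1θ² + k2θ⁴ + k3θ⁶ + k4θ⁸) with Newton's method. The function names, intrinsics, and distortion coefficients below are hypothetical placeholders, not calibration data from this drone.

```python
import math
from typing import Sequence, Tuple

# Hypothetical sketch of the Kannala-Brandt (KB4) fisheye model.


def distort(x: float, y: float, k: Sequence[float]) -> Tuple[float, float]:
    """Map an ideal normalized point (X/Z, Y/Z) to its distorted position."""
    r = math.hypot(x, y)
    if r < 1e-12:
        return x, y
    theta = math.atan(r)  # angle from the optical axis
    t2 = theta * theta
    theta_d = theta * (1 + k[0] * t2 + k[1] * t2**2 + k[2] * t2**3 + k[3] * t2**4)
    return x * theta_d / r, y * theta_d / r


def undistort(xd: float, yd: float, k: Sequence[float],
              iters: int = 20) -> Tuple[float, float]:
    """Invert distort() by solving theta_d(theta) = |(xd, yd)| with Newton's method."""
    theta_d = math.hypot(xd, yd)
    if theta_d < 1e-12:
        return xd, yd
    theta = theta_d  # initial guess: no distortion
    for _ in range(iters):
        t2 = theta * theta
        f = theta * (1 + k[0] * t2 + k[1] * t2**2 + k[2] * t2**3 + k[3] * t2**4) - theta_d
        df = 1 + 3 * k[0] * t2 + 5 * k[1] * t2**2 + 7 * k[2] * t2**3 + 9 * k[3] * t2**4
        theta -= f / df
    r = math.tan(theta)  # undistorted radius in normalized pinhole coordinates
    return xd * r / theta_d, yd * r / theta_d


def undistort_pixel(u: float, v: float, fx: float, fy: float,
                    cx: float, cy: float, k: Sequence[float]) -> Tuple[float, float]:
    """Undistort a raw fisheye pixel into normalized pinhole coordinates."""
    return undistort((u - cx) / fx, (v - cy) / fy, k)


if __name__ == "__main__":
    K4 = (-0.01, 0.04, -0.04, 0.007)  # placeholder coefficients
    print(undistort_pixel(600.0, 500.0, fx=285.0, fy=285.0, cx=424.0, cy=400.0, k=K4))
```

Multiplying the returned normalized coordinates by a new camera matrix gives the rectified pixel; a full image is rectified by doing the reverse lookup for every output pixel, which is what lookup-map functions such as OpenCV's remap precompute.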

Localization & Navigation

Real-time transformation of the camera pose into MAVLink VISION_POSITION_ESTIMATE messages (through the MAVROS vision_pose_estimate plugin), and serial communication between the Raspberry Pi and the FCU over MAVLink, were validated by flying the drone manually. The drone's pose estimate was plotted in RViz.
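VISION_POSITION_ESTIMATE carries position (x, y, z) and attitude as roll, pitch, and yaw angles, while ROS poses store attitude as a quaternion. The sketch below shows that conversion, assuming a unit quaternion already expressed in the FCU's NED frame and the standard aerospace Z-Y-X (yaw-pitch-roll) rotation order. The helper names and the dict layout are hypothetical; the keys simply mirror the message's core field names.

```python
import math
from typing import Dict, Tuple

# Hypothetical helpers; keys mirror the core VISION_POSITION_ESTIMATE fields.


def quaternion_to_euler(w: float, x: float, y: float, z: float) -> Tuple[float, float, float]:
    """Return (roll, pitch, yaw) in radians for a unit quaternion, Z-Y-X order."""
    roll = math.atan2(2.0 * (w * x + y * z), 1.0 - 2.0 * (x * x + y * y))
    # Clamp so rounding error cannot push asin's argument outside [-1, 1].
    sinp = max(-1.0, min(1.0, 2.0 * (w * y - z * x)))
    pitch = math.asin(sinp)
    yaw = math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
    return roll, pitch, yaw


def vision_position_fields(usec: int,
                           pos_ned: Tuple[float, float, float],
                           q_wxyz: Tuple[float, float, float, float]) -> Dict[str, float]:
    """Assemble position (m) and Euler attitude (rad) for one estimate."""
    roll, pitch, yaw = quaternion_to_euler(*q_wxyz)
    x, y, z = pos_ned
    return {"usec": usec, "x": x, "y": y, "z": z,
            "roll": roll, "pitch": pitch, "yaw": yaw}


if __name__ == "__main__":
    h = math.sqrt(0.5)  # quaternion for a 90 degree yaw: (cos 45, 0, 0, sin 45)
    print(vision_position_fields(1_000_000, (1.0, 2.0, -0.5), (h, 0.0, 0.0, h)))
```

Near pitch = ±90° the Z-Y-X angles become singular (gimbal lock), which is rarely an issue for a multicopter in normal flight.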

The drone can also be controlled through waypoint navigation from the QGroundControl graphical user interface. The drone's state is continuously broadcast to QGroundControl through the 915 MHz SiK telemetry radio.

Precision Landing

By integrating AprilTag detection with localization and navigation, the team automated the drone's precision landing process. Using a 100 mm × 100 mm AprilTag, the landing target position is dynamically updated and sent to the FCU at 8 to 15 Hz. After multiple tests, the team chose a landing speed of 8 cm/s.
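ArduPilot's precision landing can take the target from a companion computer through the MAVLink LANDING_TARGET message, whose angle_x, angle_y, distance, size_x and size_y fields describe where the target sits relative to the camera. Assuming the tag position comes from apriltag_ros in the camera optical frame (x right, y down, z forward, in metres), the angular offsets follow from basic trigonometry. This is a hedged sketch: the function names are hypothetical, and it is not necessarily how the team's package fills the message.

```python
import math
from typing import NamedTuple

# Hypothetical sketch; assumes tag position in the camera optical frame.
TAG_SIZE_M = 0.100  # 100 mm AprilTag edge length


class LandingTarget(NamedTuple):
    angle_x: float   # rad, horizontal offset from the optical axis
    angle_y: float   # rad, vertical offset from the optical axis
    distance: float  # m, camera-to-tag range
    size_x: float    # rad, angular width of the tag
    size_y: float    # rad, angular height of the tag


def landing_target_from_tag(tx: float, ty: float, tz: float,
                            tag_size: float = TAG_SIZE_M) -> LandingTarget:
    """Convert a tag position (x right, y down, z forward) into angular offsets."""
    if tz <= 0.0:
        raise ValueError("tag must be in front of the camera (tz > 0)")
    distance = math.sqrt(tx * tx + ty * ty + tz * tz)
    angle_x = math.atan2(tx, tz)
    angle_y = math.atan2(ty, tz)
    # Angle subtended by the tag edge, approximating the tag as facing the camera.
    size = 2.0 * math.atan2(tag_size / 2.0, distance)
    return LandingTarget(angle_x, angle_y, distance, size, size)


def descent_time_s(height_m: float, speed_m_s: float = 0.08) -> float:
    """Time to descend height_m at a constant speed (8 cm/s by default)."""
    return height_m / speed_m_s


if __name__ == "__main__":
    print(landing_target_from_tag(0.05, -0.02, 1.2))
    print(descent_time_s(1.0))
```

For example, descending 1 m at 8 cm/s takes 12.5 s, so at 8 to 15 Hz the FCU receives roughly 100 to 190 target updates during that descent. The slow speed trades landing time for more corrections per metre of descent.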